Technology has advanced exponentially since the release of the first personal computer in 1974, but these vintage photos capture the time before handheld devices and social media.

Computers are an inescapable part of everyday life for many people today, so much so that it can be easy to forget just how recently, historically speaking, they became a part of our lives. Even 40 years ago, typewriters were still a fairly common fixture of corporate offices, and phones could only be taken as far as a cord would allow.

The idea that we would one day carry phones as slim as coasters and more powerful than the computers that put mankind on the Moon seemed like science fiction.

Yet technology rapidly evolved. What began as room-sized machines designed for military and academic use quickly gave way to desktop units that revolutionized business, communication, and entertainment. By the late 20th century, personal computers had ushered in the digital age, seamlessly integrating into everyday life and making way for the rise of smartphones, laptops, and cloud services.

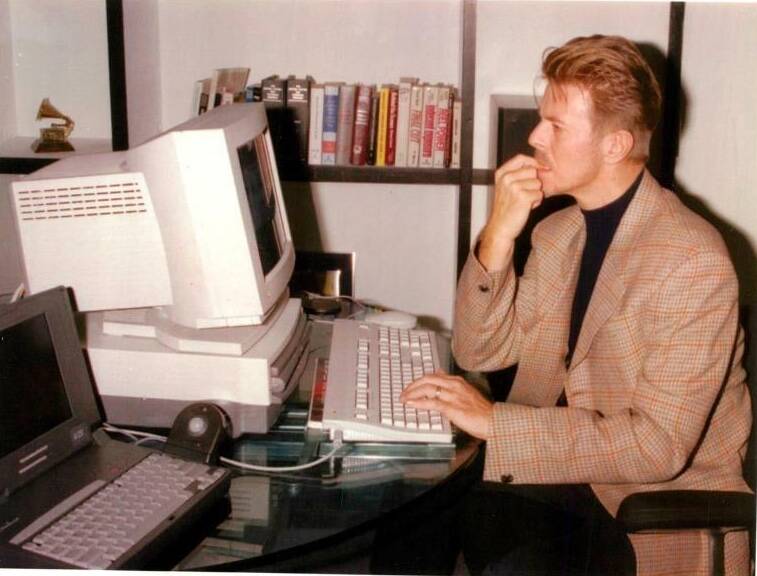

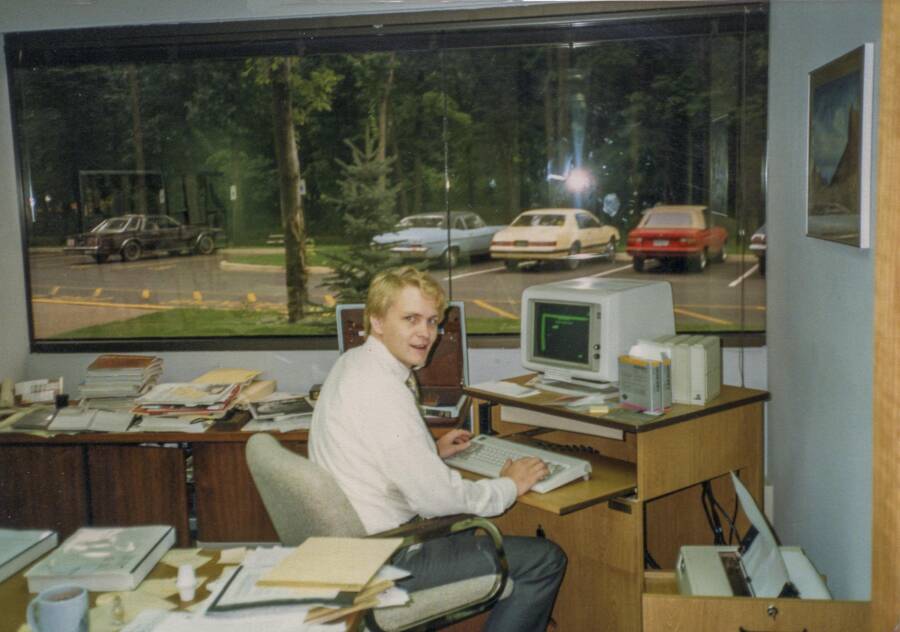

In just a few short decades, these machines became central to how humans interact, work, and create, for better or for worse. Some might even argue that we are too dependent on these sleek, ultra-fast machines — and there is a certain nostalgic charm to the chunky, white plastic CRT displays that used to adorn our desks.

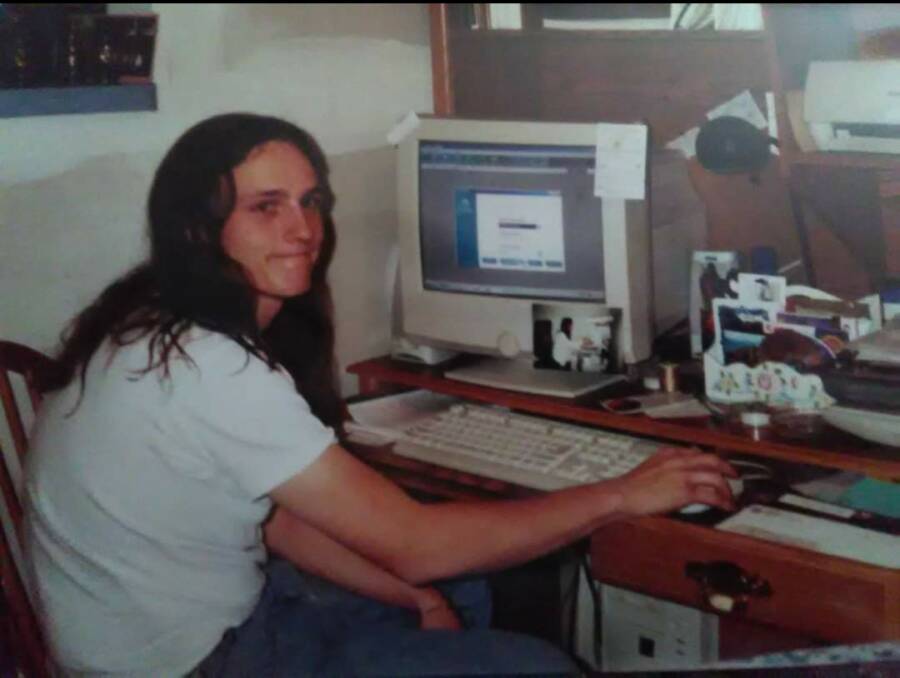

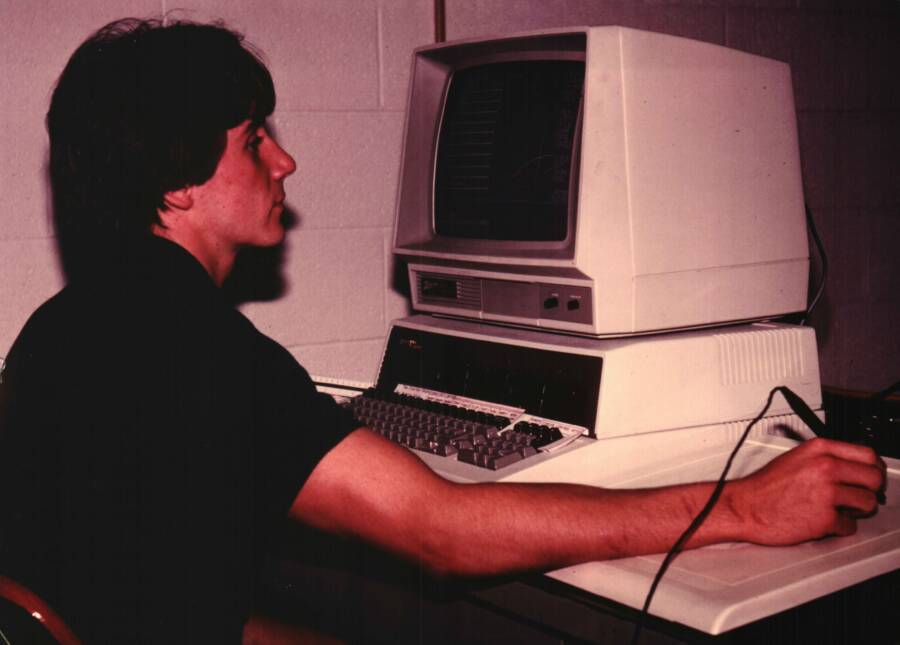

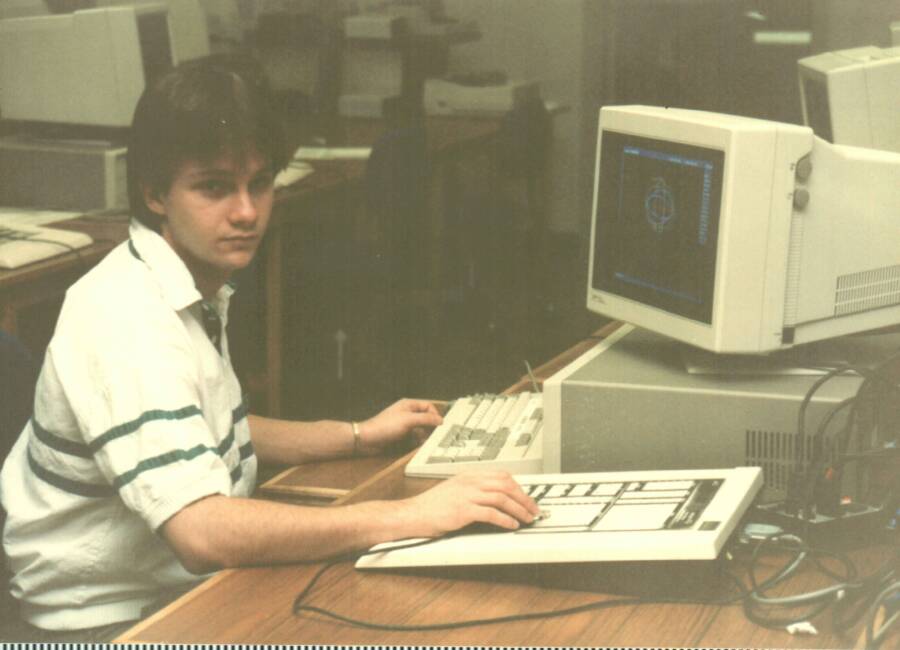

Take a trip down memory lane and see how computers became so central to daily life through the vintage photos below.

The Invention Of The Computer

Although personal computers took some time to come to fruition, their roots trace back to early 20th-century advances in computing theory and technology. One man in particular provided the theoretical foundation for these machines: Alan Turing.

Around 1936, Turing began to conceptualize what he called the "universal machine," a theoretical construct introduced in his paper On Computable Numbers.

Without getting too bogged down in the details, Turing's universal machine was not a physical device but a mathematical model of computation.

The general idea was that a single abstract machine could simulate the logic of any other computing machine, provided it had the right instructions. It operated on an infinite tape divided into squares, from which it could read both data and instructions — essentially, a machine capable of running multiple programs, rather than needing a different machine for each task. Sound familiar?
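As a rough illustration of the idea above, here is a minimal sketch in Python of a tape-based machine driven by a table of instructions. It is not Turing's own notation or a full universal machine; the function name `run_machine`, the rule-table layout, and the two example rule tables `FLIP_BITS` and `ADD_ONE` are all assumptions made up for this example. The point is only that one simulator can run different "programs" depending on the instructions it is handed.

```python
from collections import defaultdict

BLANK = "_"  # symbol read from any square that has never been written

def run_machine(rules, tape, state="start", max_steps=10_000):
    """Run a rule table on a tape and return the final tape as a string.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    # A dict stands in for the unbounded tape: missing squares read as blank.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)

# Hypothetical program 1: flip every bit, then halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "R", "halt"),
}

# Hypothetical program 2: add one to a binary number.
# Scan right to the end of the number, then carry leftward.
ADD_ONE = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}

if __name__ == "__main__":
    print(run_machine(FLIP_BITS, "1011"))  # prints 0100
    print(run_machine(ADD_ONE, "1011"))    # prints 1100
```

The same `run_machine` function handles both rule tables unchanged, which is a loose, simplified stand-in for the "one machine, many programs" idea.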

Alan Turing, the man behind the concept of the "universal machine." (Public Domain)

Turing's theoretical machine offered a foundation, while World War II accelerated development with machines like the Colossus, which was used for code breaking, and ENIAC, one of the first general-purpose electronic computers. These devices were far removed from being considered "personal" devices, though. They were enormous, filling entire rooms and requiring teams of operators.

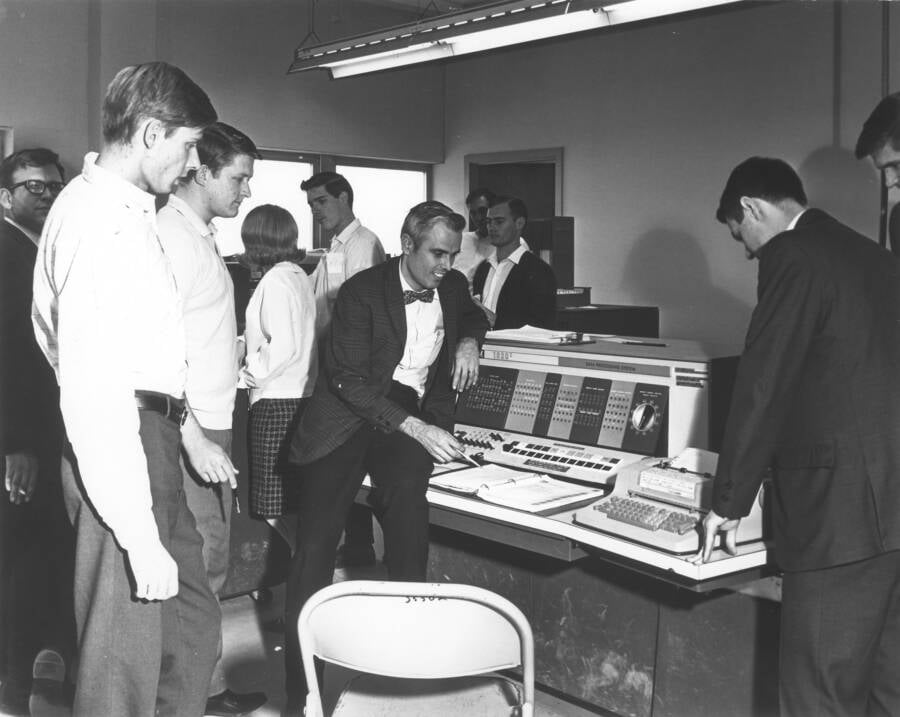

In the 1950s and 1960s, however, these machines gradually became smaller and smaller, thanks in part to the invention of the transistor in 1947 and the integrated circuit in 1958. These breakthroughs replaced bulky vacuum tubes, allowing computers to shrink in size while simultaneously becoming faster and more efficient.

These advances didn't bring the cost down enough, though. Computers were still prohibitively expensive and typically housed only at universities, large corporations, or government agencies, where access was strictly limited.

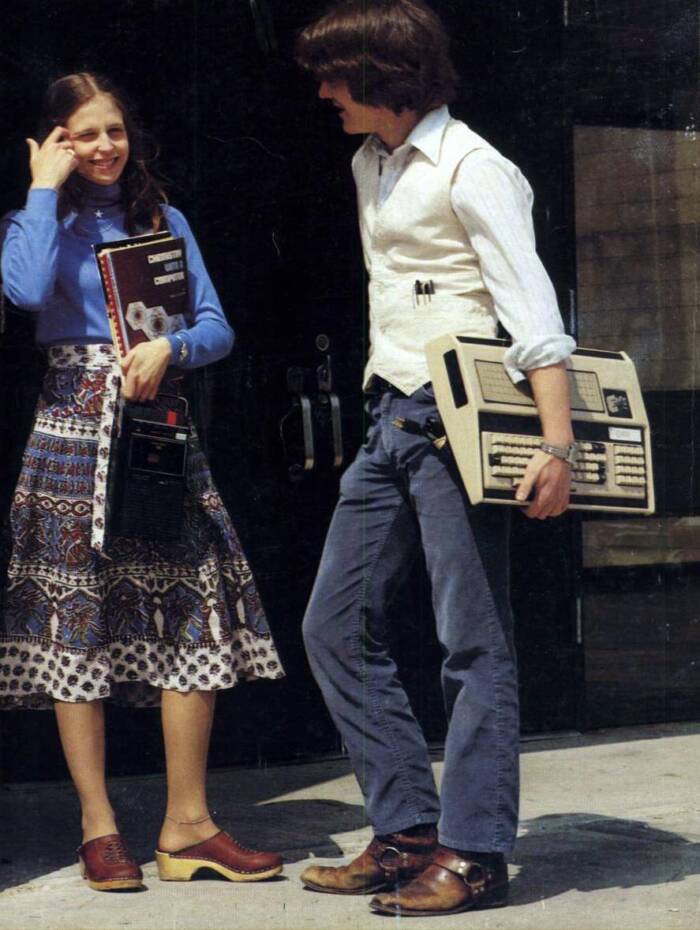

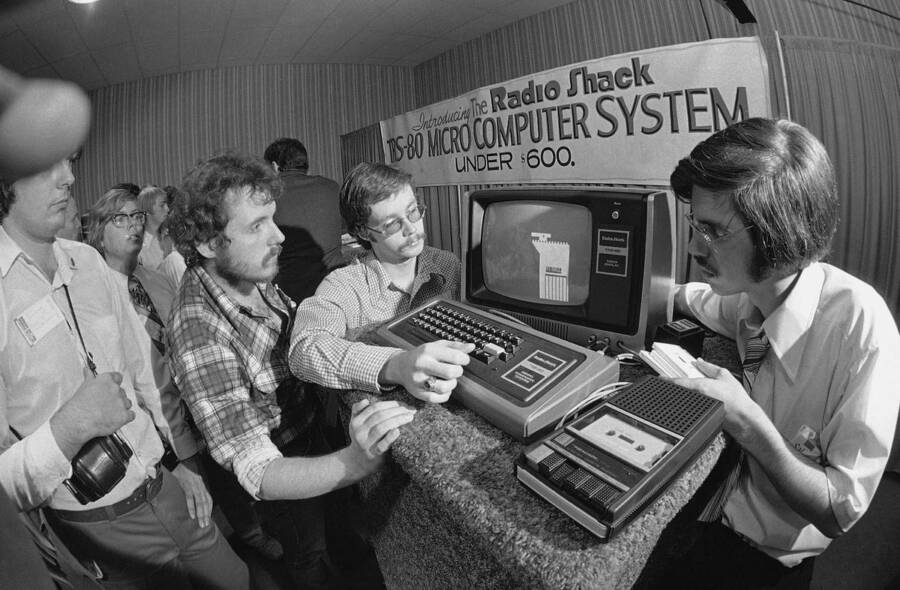

Come the 1970s, however, hobbyists and engineers began to see the potential for smaller, more affordable machines. When the Altair 8800 was introduced in 1974, many saw it as the first true personal computer. Around the same time, Bill Gates and Paul Allen wrote a version of the BASIC programming language to run on the Altair, laying the foundation for Microsoft.

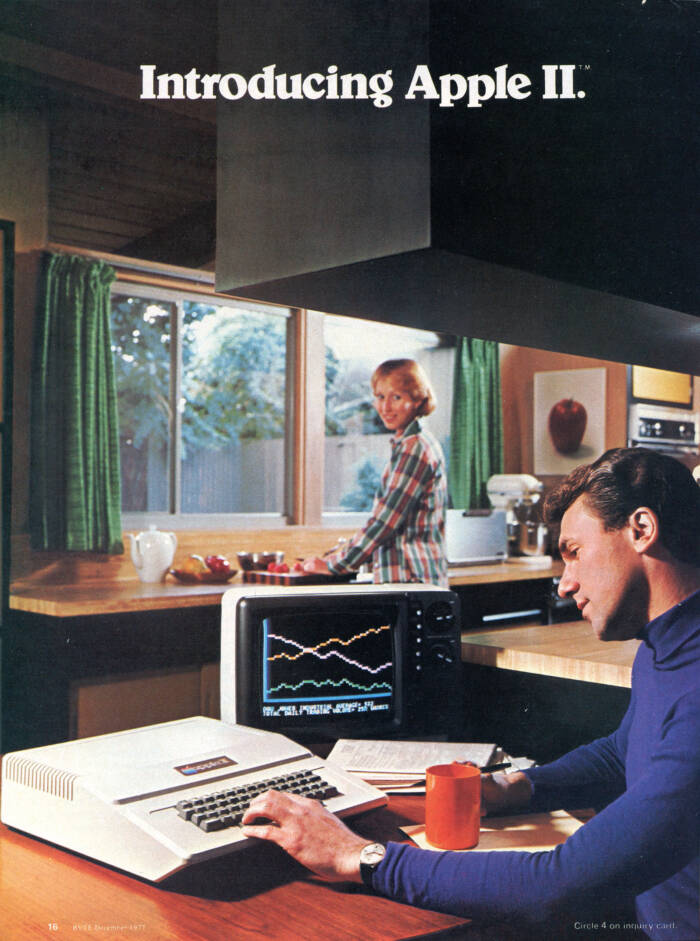

Soon enough, other influential systems started to crop up. Steve Jobs and Steve Wozniak founded Apple in 1976, and a year later, they released the Apple II, a pre-assembled machine marketed directly to consumers. A few years later, in 1981, IBM entered the field with the IBM PC, setting industry standards for hardware architecture.

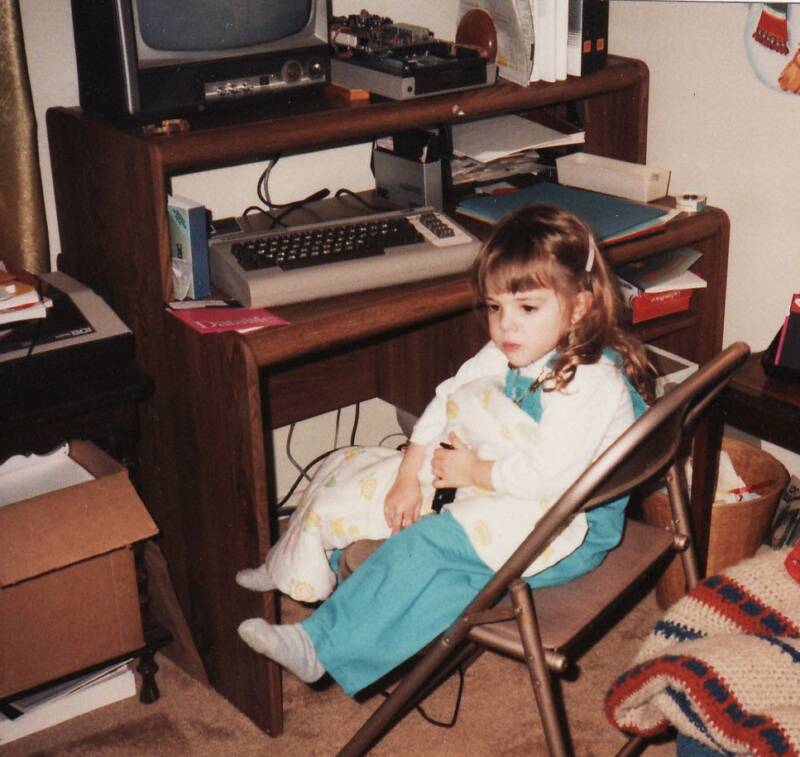

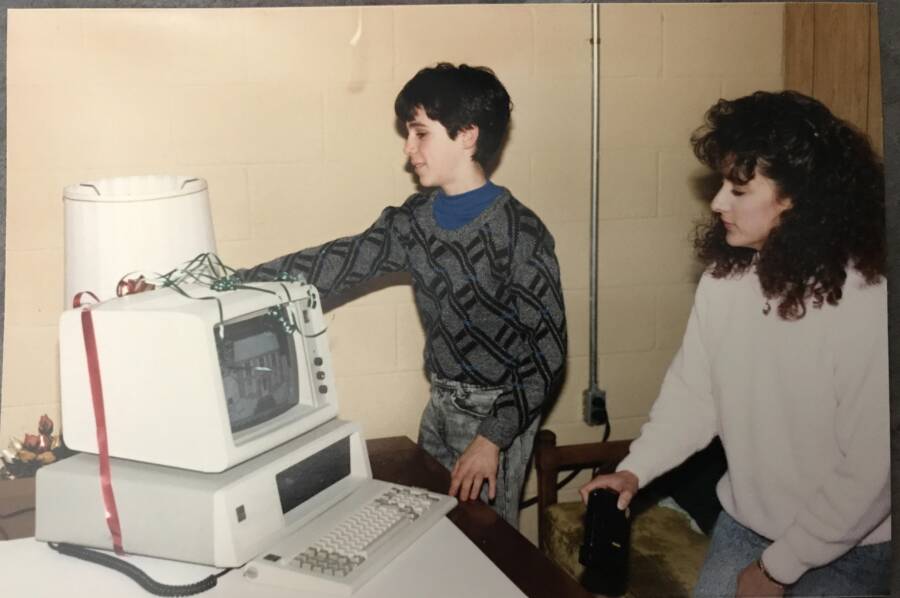

It was a huge turning point. Computers were no longer confined to labs and corporate offices: They were entering homes, schools, and small businesses.

Widespread Adoption In The 1980s And 1990s

Personal computers truly entered the mainstream in the 1980s. The IBM PC and its many "clones" quickly dominated the market, creating a competitive ecosystem of compatible machines.

Microsoft's MS-DOS operating system became the industry standard, ensuring software compatibility across brands. Before long, the company introduced Windows and helped popularize the graphical user interface (GUI), making computers more accessible to non-technical users. Apple, meanwhile, pursued a different strategy.

"What a computer is to me is it's the most remarkable tool that we've ever come up with," Steve Jobs once said, "and it's the equivalent of a bicycle for our minds."

With the launch of the Macintosh in 1984, Apple delivered its own GUI-driven system, one that was years ahead of its competitors. That, coupled with the company's iconic Orwellian Super Bowl commercial announcing the Mac, symbolized the promise of a new, user-friendly era of computing. And while Apple's market share fluctuated, its emphasis on design and usability set industry-wide benchmarks.

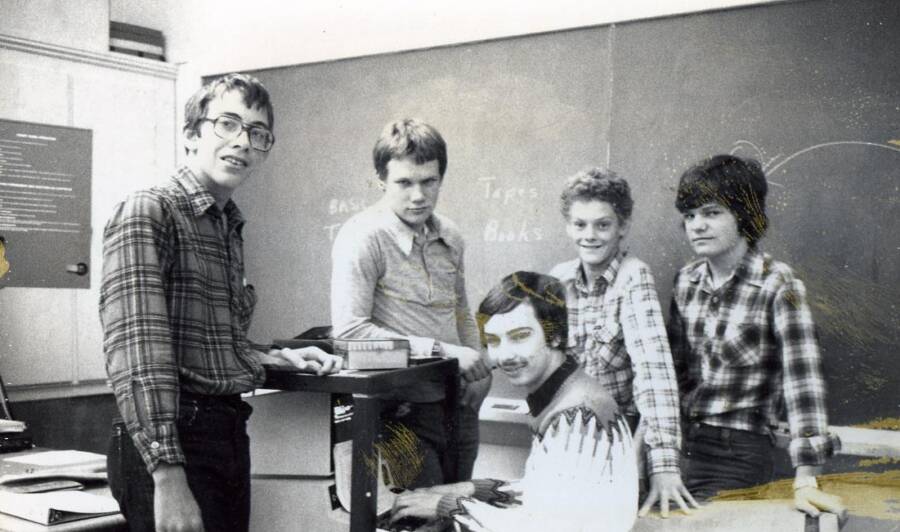

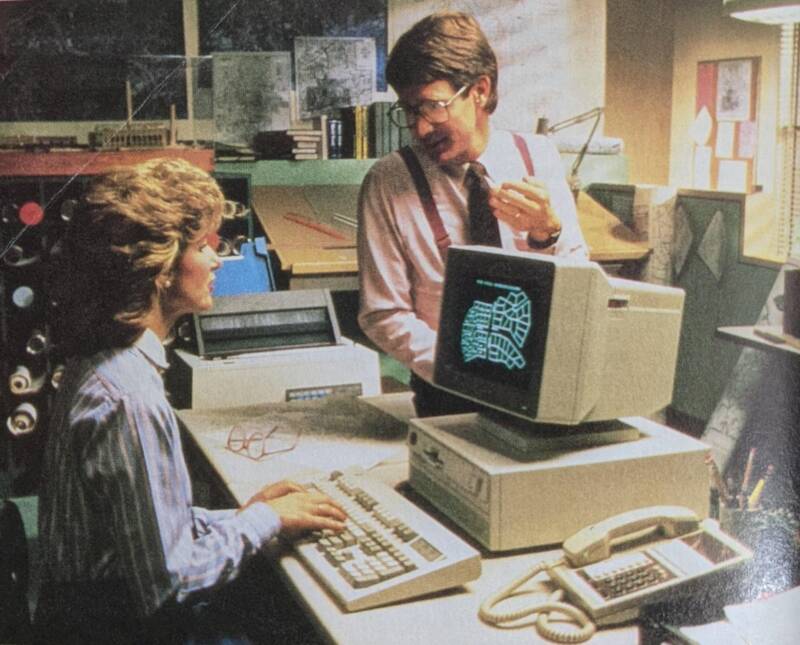

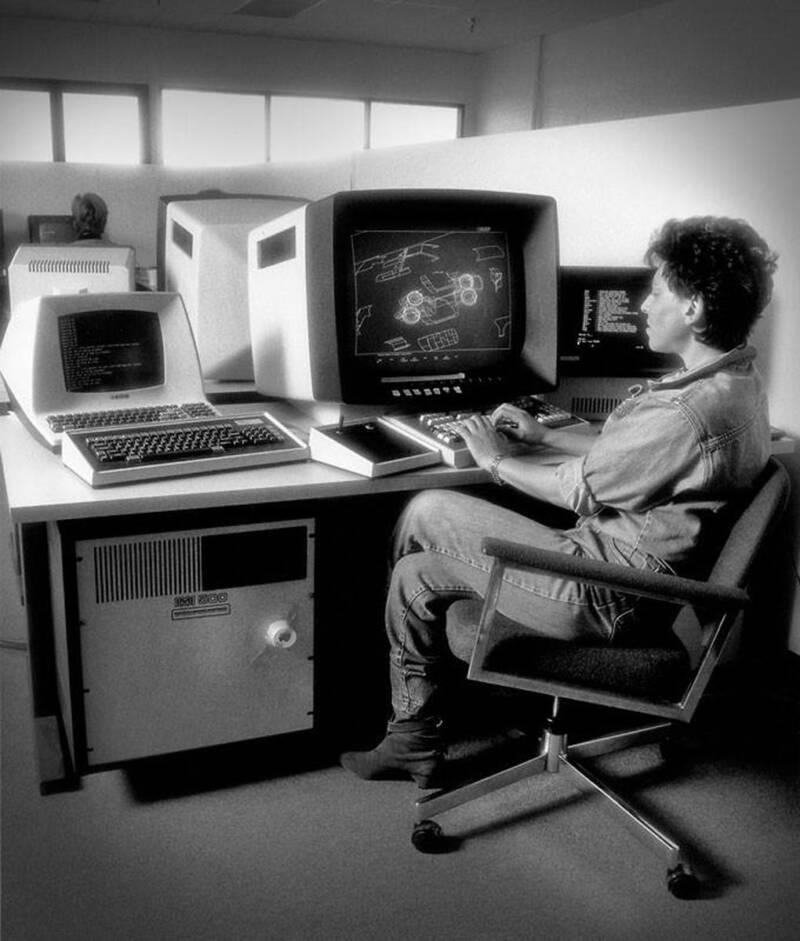

Throughout the 1980s, schools began equipping classrooms with computers, often Apple IIs or IBM-compatible machines. Word processing programs replaced typewriters, spreadsheets transformed accounting, and software like Lotus 1-2-3 and WordPerfect became workplace staples.

The rise of video games also helped establish computers as entertainment devices rather than just tools for productivity.

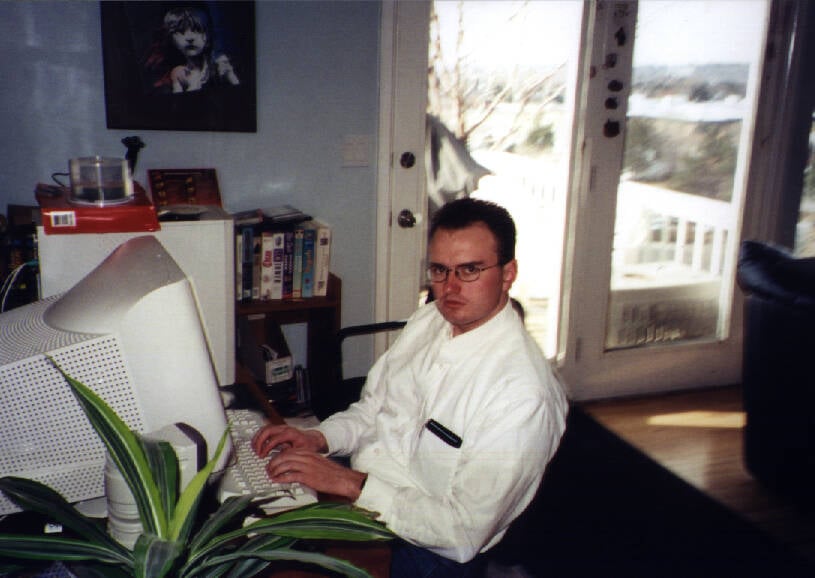

By the 1990s, the personal computer was fully integrated into the cultural mainstream. Faster processors, larger hard drives, and improved graphics cards made PCs capable of handling far more demanding tasks. Windows 95, which introduced the Start menu and taskbar, helped establish defining features of modern computers. And as more homes got online, first over dial-up and later over broadband, browsers like Netscape and Internet Explorer opened new possibilities for sharing information, shopping online, and connecting with people around the world.

Laptops also began to gain traction, thanks to lighter designs and longer battery life. Portability would, as we experience daily, become a focal point as the 21st century rolled around, leading to the modern era of computing as we know it.

Technology has come a long way since Turing first dreamed up his universal machine, bringing with it entirely new industries. These technologies haven't always been perfect — and there are certainly a number of concerns about how rapidly we are being dragged forward — but it is undeniable how fundamentally they have changed the world.

"I think it's fair to say that personal computers have become the most empowering tool we've ever created," Bill Gates once said. "They're tools of communication, they're tools of creativity, and they can be shaped by their user."
