Where Did Artificial Intelligence Come From?

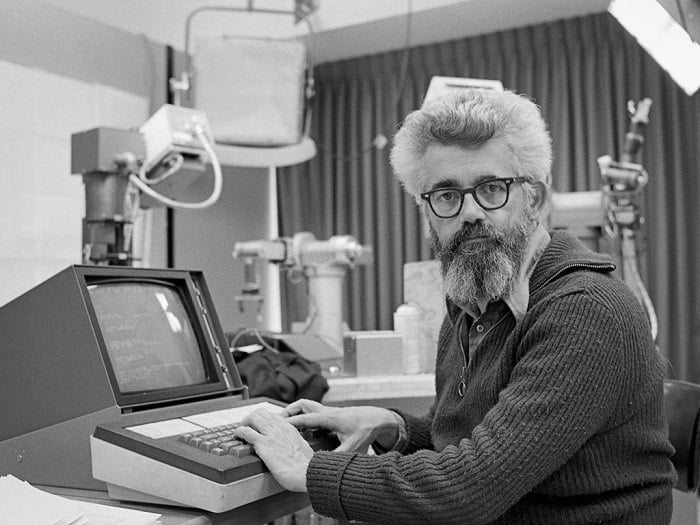

John McCarthy, considered to be the father of artificial intelligence. Image Source: Sirens Of Saturn

Artificial intelligence was a term in use decades before computers were in everyone’s pockets. The modern concept, in fact, dates back to a time when President Eisenhower’s Interstate Highway System was still in the planning stages. The term was coined by John McCarthy for a conference held in the summer of 1956 at Dartmouth College. The conference’s proposal stated its goal clearly: “…every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” Top scientists were invited to discuss AI, and two approaches emerged. The first was to pre-program a computer with the rules of human behavior. The second was to build something similar to neural networks, which simulate brain cells, so the machine could learn new behaviors.

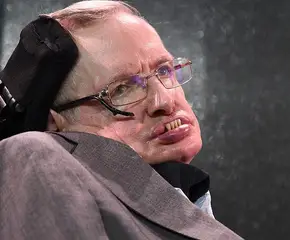

Marvin Minsky, a pioneer in early artificial intelligence, pictured with a robotic arm. Image Source: Valbonne Consulting

Marvin Minsky, who later co-founded the Artificial Intelligence Laboratory at MIT, and John McCarthy, who organized the conference, favored the former approach. So did the U.S. government, which gave them significant funding in the hope that AI could help win the Cold War. For a time, it appeared that human-level AI was just around the corner: in 1970, Minsky predicted that a machine with the general intelligence of an average human being would arrive within three to eight years. The reality was far harsher. The government slashed funding (leading to what became known as the “AI winter”), and innovation lagged until 1981, when private businesses picked up where the government had left off.

“I like to just keep an eye on what’s going on with artificial intelligence,” Elon Musk said when questioned about his investment in AI research company Vicarious in 2014. “I think there is a potentially dangerous outcome there. There have been movies about this, you know, like Terminator.”

By 1984, Hollywood was back to predicting how AI would take over and destroy the human race. In the Terminator films, which began with the first movie that year, a self-aware Skynet spreads itself to millions of computer servers and, in 1997, tries to destroy humanity by launching nuclear missiles at Russia, prompting Russia to retaliate by emptying its silos at the U.S. It was a plot straight from everyone’s Cold War nightmares.

A chess program called Deep Blue beat the world chess champion in 1997. Image Source: iDisrupted

In real life, the biggest human-AI conflict of 1997 was staged on a chessboard. In a match billed as “the brain’s last stand,” world chess champion Garry Kasparov took on the supercomputer Deep Blue, which could evaluate up to 200 million positions per second. Deep Blue won the six-game match 3½–2½. While far from having the power to take over the world, it was a pivotal moment that showed a machine could outplay the best human at a game of deep strategy (although, importantly, Deep Blue didn’t prove that AI could learn like humans, only that it could excel at a specific task).

Artificial intelligence expanded rapidly in the 2000s. Self-driving cars, cell phones that double as personal assistants, a chatbot that can fool people into believing it’s a live person, and a range of robots that can accomplish specific tasks are all becoming part of daily life. But is it possible these harmless helpers are performing a more insidious function, conditioning humanity to trust AI more instinctively and making us more willing to turn over vital, and potentially lethal, systems to its control?