Weaponized Artificial Intelligence

Modular Advanced Armed Robotic System (MAARS), human controller not required. Image Source: RT English

Technological advancement has relied on military funding for years. Without the military’s investments, it’s extremely unlikely we would have GPS, computers or the Internet, so it comes as no surprise that military investments are also funding AI research. The trouble is, when robots are armed, a whole different set of standards comes into play. It is simply not known what will happen when a number of autonomous weapons systems come together.

One suggested answer to the threat is a programmed set of laws. Most artificial intelligence in science fiction leans heavily on Isaac Asimov’s “Three Laws of Robotics,” which are essentially a short set of rules to ensure robots are not capable of harming humans. In reality, however, some of the world’s most advanced robots were created for the sole purpose of harming humans, with drones an especially popular option for the U.S. military.

The Predator drone, an armed drone widely used by the U.S. military. Image Source: Truther.org

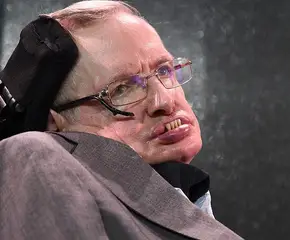

The benefits of a drone are obvious: A single person in the U.S. can attack a compound or individual on the other side of the world without ever putting themselves at risk. But while drones are currently guided by people who make the ultimate decisions, the fear is that one day technology will advance to the point where it can make lethal decisions without human approval. It is this very fear that has caused a group of the world’s leading scientists, known as Future of Life, to write an open letter warning of the dangers of mixing AI with military hardware. Prominent member Stephen Hawking’s opposition to weaponized AI comes largely from a fear of the moment when creators no longer fully understand the weapons they are building. Humans, Hawking argues, evolve too slowly to keep up with AI.

“Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded,” Hawking warns.

The letter also outlines the direction that further AI research should take for society to gain the most benefit, covering topics such as law, philosophy and economics.

“The potential benefits are huge, since everything that civilization has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools AI may provide, but the eradication of disease and poverty are not unfathomable,” the letter reads. “We recommend expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our AI systems must do what we want them to do.”

Physical weapons aren’t the only fear, either. The U.S. saw a preview of how AI could potentially use information as a weapon in the New York Stock Exchange’s “Flash Crash” of May 6, 2010. In a matter of 10 minutes, nearly $1 trillion disappeared and returned. It was a bigger collapse than those seen after 9/11 or after the fall of Lehman Brothers, which kicked off the Great Recession.

The culprits were relatively simple forms of AI that were placing and canceling market orders in a complex sequence of automated triggers. Put simply, machines were flooding the New York Stock Exchange with orders to slow down processing and create price discrepancies that lasted a matter of seconds, while the fastest machines bought and sold, making a killing.

https://www.youtube.com/watch?v=tzS008trTcI

The Future of Life petition, however, continues to focus on physical weapons.

“Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group. We therefore believe that a military AI arms race would not be beneficial for humanity.”

The question is, is it already too late to stop an AI arms race?